Software

Before writing the software, I had to ask myself: is my hackish hardware
sufficient to differentiate between the various ways to touch the sensor? One of
the more intuitive ways to check is to see whether the measurements actually
differ enough. Time to record the measurements and plot some data!

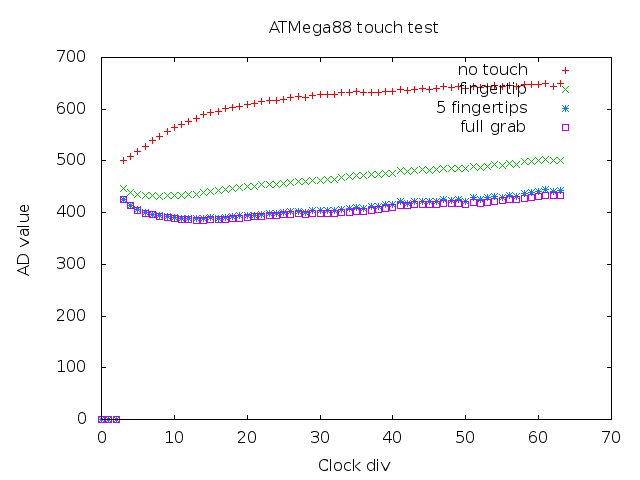

These are the raw measurements from the AVR. The vertical axis is the AD-value read
from the pin connected to the envelope detector; the horizontal axis is the divider
value the PWM-generator uses. The actual frequency is 10MHz/(div+1). As you can see,
the various methods of touching the sensor all have fairly distinct response
patterns. A machine learning algorithm should be able to differentiate between them
without too much trouble.
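To get a feel for what the horizontal axis means in terms of actual frequency, the 10MHz/(div+1) relation can be put into a few lines of Python. This is only an illustration of the formula: the function name and the divider values in the loop are made up, not taken from the firmware.

```python
CLOCK_HZ = 10_000_000  # the 10MHz base clock from the formula above


def pwm_frequency_hz(div):
    """Return the PWM frequency in Hz for a given divider value."""
    if div < 0:
        raise ValueError("divider must be non-negative")
    return CLOCK_HZ / (div + 1)


# A few example divider values (hypothetical, not the sweep the AVR really uses).
for div in (0, 1, 4, 9, 99):
    print(f"div={div:3d} -> {pwm_frequency_hz(div) / 1e6:.3f} MHz")
```

Note that the relation is not linear: low divider values are spaced far apart in frequency, high divider values sit close together.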

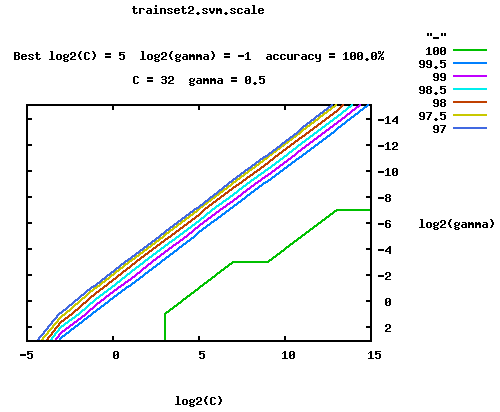

For the machine learning part, I decided to stick with an SVM algorithm, just as the
Disney labs did. I could probably have used something else, like a neural network,
with the same results, but I have experience with libsvm, an SVM library which is
easy enough to use to complete the project quickly. It has a fully automated
training script, which will spit out a model when you feed it a training file.
I modified my software to output the data in the required training file format
and recorded the sensor data for the four different classifications: no touch, one
finger only, five-finger grab and full-hand grab. I then ran the trainer on it, which
told me it should be able to distinguish between the classes with 100% accuracy.
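As an aside, the libsvm training file format is plain text: one line per sample, starting with the class label, followed by `index:value` pairs with indices counting from 1. A rough sketch of how one frequency sweep could be turned into such a line is shown below. The label numbers and the helper name are assumptions for illustration; the post names the four classes but not how they were numbered.

```python
# Hypothetical label numbering for the four classes.
LABELS = {
    "no touch": 0,
    "one finger": 1,
    "five finger grab": 2,
    "full hand grab": 3,
}


def to_libsvm_line(label, samples):
    """Format one sweep as '<label> <index>:<value> ...' (indices start at 1)."""
    features = " ".join(f"{i}:{v:g}" for i, v in enumerate(samples, start=1))
    return f"{label} {features}"


# One made-up sweep of three AD-values, recorded while grabbing with five fingers.
print(to_libsvm_line(LABELS["five finger grab"], [512, 300.5, 87]))
```

Each recorded sweep becomes one line, and all lines for all four classes go into the same training file.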

After that, it was only a matter of adjusting my software to load the model, scale
the incoming measurements and run the classifier on them.
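The scaling step deserves a short illustration, because live measurements have to be scaled with the same per-feature ranges that were used on the training data, otherwise the model sees numbers it was never trained on. The sketch below assumes simple linear scaling of every feature to [-1, 1]; the exact scaling used in this project is not shown in the post, and both function names are made up.

```python
def fit_ranges(training_rows):
    """Record the (min, max) of every feature over all training sweeps."""
    columns = zip(*training_rows)
    return [(min(col), max(col)) for col in columns]


def scale(row, ranges, lower=-1.0, upper=1.0):
    """Linearly map each value into [lower, upper] using the training ranges."""
    scaled = []
    for value, (lo, hi) in zip(row, ranges):
        if hi == lo:
            # Constant feature in the training data: this sketch simply
            # maps it to the midpoint of the target range.
            scaled.append((lower + upper) / 2)
        else:
            scaled.append(lower + (upper - lower) * (value - lo) / (hi - lo))
    return scaled


# Made-up training data: two sweeps with two measurements each.
ranges = fit_ranges([[0, 10], [100, 30]])
print(scale([50, 10], ranges))
```

The scaled list is what would then be handed to the classifier together with the loaded model.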