Intro

Controlling RGB LEDs has never been difficult: just send a PWM signal to the red, green and blue LED and you have a color. Controlling multiple LEDs isn't that hard either: just throw a few MOSFETs in. Controlling a lot of RGB LEDs and having each one display a separate color is harder: you need to either put them in a matrix or have a chip next to each one. The second solution has become easier over time because of chips specifically designed to do the job. At first, you had Chinese LED strips with a chip every three LEDs, with an SPI-like bus running along to set each group of three LEDs to a 15-bit color. Later, the chips got better and could display 24-bit color. Nowadays, we have LEDs that actually integrate the driver chip in the LED package. An example is the WS2812: a nice and bright LED in a PLCC form factor, with just four pins for power and data. The integration of the chip in the LED even seems to make it the cheapest choice (at the time of writing) if you want something that's per-LED addressable.

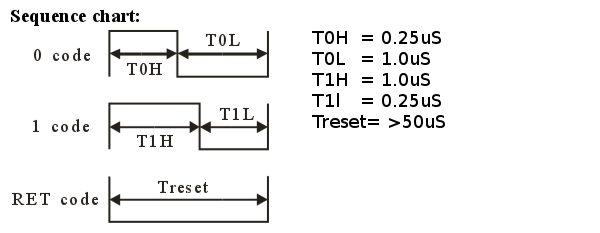

These chips also have a disadvantage. While other chips run on a kind of SPI-like protocol whose signals are easily generated using either a microcontroller SPI port or software bit-banging on GPIO, the WS2811 (the little controller die inside a WS2812 LED) uses a single-wire protocol, encoding the ones and zeroes in the duration of the high pulse. 24 of these pulses set the intensity of the red, green and blue elements in the LED, and a stream of these 24-bit signals provides each LED with its individual color. This also means the pulses are quite timing-sensitive and high-speed.
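To make the encoding a bit more concrete, here is a minimal Python sketch of how a list of colors could be turned into a list of high/low pulse durations. The timing constants and the green-red-blue bit order are assumptions taken from the commonly quoted WS2812 datasheet figures, not from this article, and the function names are made up for illustration. It only models the waveform; it does not drive any hardware.

```python
# Assumed nominal timings (commonly quoted WS2812 datasheet values, in ns).
T0H_NS = 350          # high time that encodes a 0 bit
T1H_NS = 700          # high time that encodes a 1 bit
BIT_PERIOD_NS = 1250  # one bit slot, i.e. roughly 800 kbit/s


def color_to_bits(red, green, blue):
    """Return the 24 bits for one LED, MSB first.

    The G-R-B channel order is an assumption (it is the order usually
    given for the WS2812); swap the tuple below for other parts.
    """
    bits = []
    for channel in (green, red, blue):
        for shift in range(7, -1, -1):
            bits.append((channel >> shift) & 1)
    return bits


def bits_to_pulses(bits):
    """Map each bit to a (high_ns, low_ns) pair within one bit slot."""
    pulses = []
    for bit in bits:
        high = T1H_NS if bit else T0H_NS
        pulses.append((high, BIT_PERIOD_NS - high))
    return pulses


def encode_strip(colors):
    """Concatenate the pulse trains for a list of (r, g, b) tuples."""
    pulses = []
    for red, green, blue in colors:
        pulses.extend(bits_to_pulses(color_to_bits(red, green, blue)))
    return pulses


if __name__ == "__main__":
    # Two LEDs: the first pure red, the second a very dim blue.
    for high_ns, low_ns in encode_strip([(255, 0, 0), (0, 0, 1)]):
        print(high_ns, low_ns)
```

Every bit occupies the same slot length; only the point at which the line drops back to low changes, which is why a few hundred nanoseconds of jitter are enough to flip a bit.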

This makes controlling the things from a non-realtime OS like Linux pretty hard. A program running under such an OS would need to spit out a perfectly timed stream of those weirdly encoded ones and zeroes. A context switch or an interrupt, however, could easily introduce a delay orders of magnitude bigger than the timing requirements allow. This would make the LEDs de-synchronize or reset, which introduces flicker or other randomness. This can be alleviated by handing control of the LEDs to a secondary microcontroller, running without an OS to interfere with the timings. Of course, this adds cost, and the limited bandwidth between the Linux host and the microcontroller can limit the framerate and/or the number of controllable pixels.
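To put rough numbers on that mismatch, the sketch below computes how long one frame takes for a given strip length and checks whether a stall in the middle of a frame would be long enough to be seen as a reset. The 1.25 µs bit period and the 50 µs reset gap are assumed datasheet figures (some later chip revisions reportedly need a longer gap), and the 1 ms stall in the example is a hypothetical value, not a measurement.

```python
# Assumed protocol figures, not taken from this article.
BIT_PERIOD_US = 1.25   # one bit slot at roughly 800 kbit/s
BITS_PER_LED = 24      # 8 bits each for the three color channels
RESET_GAP_US = 50.0    # low time after which the LEDs latch and restart


def frame_time_us(num_leds):
    """Time to clock out one full frame, including the reset gap."""
    return num_leds * BITS_PER_LED * BIT_PERIOD_US + RESET_GAP_US


def max_frame_rate(num_leds):
    """Upper bound on frames per second for a single data line."""
    return 1_000_000 / frame_time_us(num_leds)


def stall_breaks_frame(stall_us):
    """True if a pause mid-frame is long enough to count as a reset."""
    return stall_us >= RESET_GAP_US


if __name__ == "__main__":
    print(frame_time_us(100))        # microseconds per frame for 100 LEDs
    print(max_frame_rate(100))       # frames per second for 100 LEDs
    print(stall_breaks_frame(1000))  # hypothetical 1 ms scheduler stall
```

Under these assumptions, a 1 ms stall spans 800 bit slots and is 20 times the reset gap, so the LEDs would latch a half-written frame and treat whatever follows as the start of a new one.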

Sometimes, however, you can work around the need to use CPU power to precisely time the signal stream. The OctoWS2811 library does that for Teensy boards, using some smart DMA magic to construct the stream almost without any CPU involvement. The downside is that the Teensy is just an embedded board, and usually you need to connect it to something larger, e.g. a Raspberry Pi, to hook it up to Ethernet or do other higher-level stuff. The idea of (ab)using the onboard hardware of a controller for this is a good one, however, and I wondered if there was a way to do something similar on a device running Linux.

My first thought was to use a Raspberry Pi with the DMA trick I used in my LED board controller build. Unfortunately, that couldn't meet the tight timing requirements of the WS2811: the CPU sometimes gets priority over the DMA transfer, introducing a small but disastrous delay. So, the question was: is there something else that can transfer bits from memory to IO pins and that does have strict timing guarantees? Turns out there's at least one thing: a video interface. Unfortunately, the one on the Raspberry Pi isn't documented, but there are other Linux-based boards available. For example, Olimex produces the oLinuXino series of boards, which are open-hardware Linux boards based, among others, on the very well-documented iMX233 series. I managed to snag an oLinuXino Nano at an eBay auction for EUR18 and decided to use that.
